It’s not uncommon during early attempts to bootstrap CI and automated testing for an engineering team to start with ‘unit’ tests that read and write to a common development database. Truly, these should be considered more integration tests than unit tests, since they touch more than a single layer of the architecture, but that’s not the main drive of this particular post. My primary goal in adopting testing culture is increasing the amount of reliable and automated testing. Moving towards more pure unit tests will be a drive towards making our automated tests faster (and probably improved architecture)… but that is a problem for a future day.

The problem with having tests run against a centralized development database is the reliable part. That development database is fair game for any and all developers, QA, sales people, the CEO… you name it… for data updates, deletions, inserts and all sorts of shenanigans. That test you wrote that tries to pull the record for ‘Bugs Bunny’, and asserts that you get back one record works the first day you wrote it… and maybe for weeks after that. But one day, some engineer with an odd hatred for Bugs… I mean, who doesn’t like Bugs Bunny?… updates the name to Elmer Fudd. And now your test goes kaplooie.

The really insidious problem with this is that it will happen all the time… random tests failing… and not because the code is broken but because your test setup is flawed. It happens so often that people stop paying attention to the tests (bad) or explicitly add Ignore attributes to them (worse). Now, when a real bug comes along as a result of actual code changes, you won’t catch it. Flaky tests are a leading cause for the failure of automated testing initiatives. Flaky test detection is even built into TeamCity.

So what do we need to do to get away from writing our tests against this volatile data? Well, one way is certainly to mock the data/repository layer to return the data you want. This is a good approach, but not the focus of this post. For a variety of reasons, it may not be possible or even advisable. In the first place, your architecture may not lend itself very well to mocking out this layer. Furthermore, in many cases the data is being retrieved through stored procedures or other tiers which do a fair amount of logic and/or data transformation… mocking that away limits the usefulness of the automated test. Some will say ‘well, re-architect that piece and test each layer independently’… I respond ‘I’ll re-architect after my tests are in place’.

So leaving that whole argument aside (why do you keep bringing it up?!?), there’s another way to avoid this problem. We can create an isolated copy of our database that each test run can use, that is local to each build runner (so they don’t step on each other during runs) and can return to its original state not only after each test run, but after every test.

Now, I’ve done this in the past with a very proprietary approach. It was, I think, highly effective but also a fairly large investment in startup time and maintenance. There has to be a better way, I thought… enter SQL Server Data Tools (hereafter referred to as SSDT).

SSDT has been around a long time and been through a somewhat bewildering collection of incarnations. It was a standalone product, sometimes part of SSMS, and later a Visual Studio integration (its current incarnation). At its heart, it’s got a couple of core features. Number one, it can target a database instance and export the result as a collection of files inside your project. Number two, it can compare that current export to the target database and find the differences. And number three, it can update the target database with those differences. In its current incarnation, these tools manifest as a SQL Server Database Project in Visual Studio 2015. After importing a database into such a project, you can compile it into what’s known as a DACPAC. This is simply a file with the .dacpac extension; it is essentially an archive of script files and some other metadata that can be compared and/or deployed to other ‘targets’.

My central idea was to use SSDT to build a dacpac file that would define the schema of our testing database and wrap that DACPAC artifact in a nuget package that testing projects could reference. In that way those testing projects can, at runtime, attach/deploy/update their local database with the latest schema before running their tests. As a pure abstraction, I want to say that my testing project has a dependency on this database, so that when that database changes (modify a table, change a column, change a stored procedure), I want to pull in those changes and run my tests against them.

I’ll be using Visual Studio 2015 for this adventure and I’ve updated to the latest and greatest version of SSDT using the Extensions and Updates manager.

So, first things first… Create a new Project in Visual Studio. Under Templates, choose SQL Server, and then choose SQL Server Database Project.

Now, right-click on your new Database Project and choose Import->Database. You have a few options here. I chose to ignore permissions and logins for these purposes since it introduces complications I don’t necessarily want to deal with just yet.

Once that’s complete, you’ll notice that there are a ton of new folders and script files in your database project. These represent the tables, views, stored procedures, functions, etc. that define your database.

Now, the fun part… try and build your project. This will attempt to ‘compile’ all of these scripts into a dacpac file. I’ve got to be honest… depending on the complexity of your database, this may be a showstopper for you. The build process is not very forgiving. You may have dead code in there… stored procedures that will no longer compile, references to linked databases that don’t exist, user and permission errors… that you may have to cleanup and/or delete to get the thing to compile. In my case, there were some hangups with a few logins I cleared up, and a few procedures referencing linked servers that didn’t exist and were no longer necessary. In those cases, we commented out the offending lines since the procedure was no longer in use. If you can get the thing to compile, it should produce a dacpac file… you should find it in your bin/Debug folder.

Now, if you can get that far, the next step is to get it to build on your CI server. I use TeamCity, but the steps should be similar on other CI tools. The cool thing is that, after a passive aggressive tweet about having to install Studio on my build agent, I was informed by a member of the SSDT team that they’ve just released a nuget package for bundling the SSDT targets and tools with your project which allows you to build using msbuild on your build agent:

https://www.nuget.org/packages/Microsoft.Data.Tools.Msbuild/

Now, I actually had Studio already installed on my build agent, and I think I’m over it at this point. This is what many CI tools suggest, it’s what they do at AppVeyor and what the TFS Build team recommends, so I’m done fighting. I’ve installed the community version on my build agent and moved on with my life. If you’re not there yet, you can add this new tools package to the Database Project and should be able to build with a pure MSBuild task.

Now that we’ve got that working, we can wrap the resulting dacpac file in a nuget package and then see how we will use it in the testing project. This is where it gets fun… many of you use nuget for packaging assemblies, but how about for other content?

First, you’ll need a nuspec file to define what’s in your package:

    <?xml version="1.0"?>
    <package>
      <metadata>
        <id>Databases.AcmeDatabase</id>
        <version>$version$</version>
        <authors>ACME, Inc</authors>
        <owners>ACME, Inc</owners>
        <description>Package for ACME dacpac</description>
        <copyright>Copyright 2016</copyright>
      </metadata>
      <files>
        <file src="bin\$configuration$\Databases.AcmeDatabase.dacpac" target="content" />
        <file src="bin\$configuration$\Install.ps1" target="tools" />
      </files>
    </package>

There are a couple of variables in there that are driven by the build server. $version$ is passed in by TeamCity using the %build.number% variable so that we get incremented package versions with each build, and $configuration$ (Debug/Release) is also passed in. Both are passed during the nuget pack step.

The cool piece is the Install.ps1 file added to the package’s tools folder. NuGet runs this file (it must be called Install.ps1) when the user installs the package, so you can use it for install actions [More info here]. In this case, since it’s not a normal assembly reference, I’m going to add install code to copy the dacpac into the project and mark it to ‘Copy Always’ during the build process using the following code:

    param($installPath, $toolsPath, $package, $project)

    # With the default packages folder layout, the solution root is two levels above the install path
    $rootDir = (Get-Item $installPath).parent.parent.fullname
    $deployTarget = "$rootDir\Databases"
    $deploySource = join-path $installPath 'content'

    # Create the Databases folder on first install
    if (!(test-path $deployTarget)) {
        mkdir $deployTarget
    }

    # Copy every file from the package's content folder (one or more dacpacs)
    Copy-Item "$deploySource/*" $deployTarget -Recurse -Force

    # Find or create a "Databases" solution folder
    $solution = Get-Interface $dte.Solution ([EnvDTE80.Solution2])
    $deployFolder = $solution.Projects | where-object { $_.ProjectName -eq "Databases" } | select -first 1
    if(!$deployFolder) {
        $deployFolder = $solution.AddSolutionFolder("Databases")
    }

    $folderItems = Get-Interface $deployFolder.ProjectItems ([EnvDTE.ProjectItems])

    ls $deployTarget | foreach-object {
        $folderItems.AddFromFile($_.FullName) > $null
        $item = $project.ProjectItems.Item($_.Name)
        $copyToOutput = $item.Properties.Item("CopyToOutputDirectory")
        $copyToOutput.Value = 1 #Copy always
        $buildAction = $item.Properties.Item("BuildAction")
        $buildAction.Value = 2 #Content
    } > $null

So, when you install this to your testing project, it will copy the dacpac file (or files) into a subfolder called Databases and mark each file to be Copied Always into the build output during compilation. This last step will make it easier to access the file during test startup.

Now, there’s one more piece I added to version the dacpac file itself which will make things easier later. First, go to the properties of the Database Project and click the properties button under the Output types. Change the version to 1.0.0.1 and then click OK.

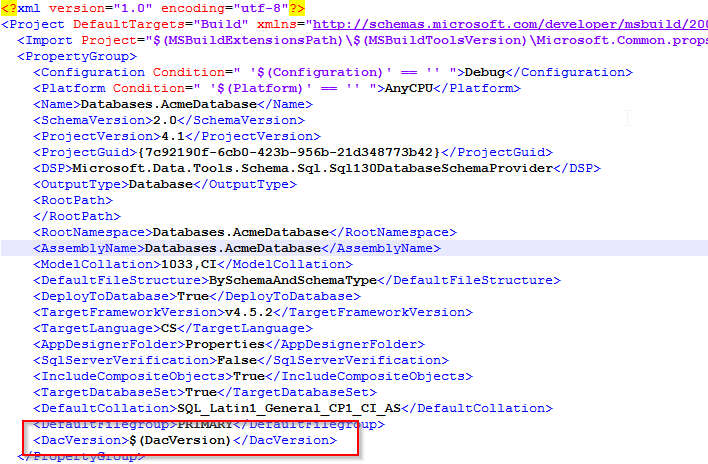

This will force it to add some properties to the sqlproj file. Now open the sqlproj file in your favorite text editor and make the following change:

Now, in the build step I pass the DacVersion in as an environment variable so that I can also stamp the version number into the dacpac file. This needs to be passed in during the MsBuild step which is typically done as an environment variable. In TeamCity, this can be done by adding env.DacVersion=%build.number% to your parameters. If you open the resulting dacpac as a zipfile, and look at the DacMetadata.xml file, you’ll see your shiny new version number… this will be important later.
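If you’d rather check the stamped version from code than unzip the file by hand, here is a minimal sketch. It relies only on what’s described above: a dacpac opens as a zip archive and contains a DacMetadata.xml file. The class and method names (`DacpacInspector`, `ReadDacVersion`) are made up for illustration, and I’m assuming the version lives in an element named `Version`; the lookup ignores the XML namespace so it doesn’t depend on the exact schema URI.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: reads the version stamped into a dacpac's DacMetadata.xml.
public static class DacpacInspector
{
    public static Version ReadDacVersion(Stream dacpacStream)
    {
        // A dacpac is a zip archive; leave the caller's stream open.
        using (var archive = new ZipArchive(dacpacStream, ZipArchiveMode.Read, true))
        {
            var entry = archive.GetEntry("DacMetadata.xml");
            if (entry == null)
            {
                throw new InvalidDataException("DacMetadata.xml not found in the package.");
            }

            using (var entryStream = entry.Open())
            {
                var doc = XDocument.Load(entryStream);

                // Assumption: the version is stored in an element called "Version".
                // Matching on the local name avoids hard-coding the namespace.
                var versionElement = doc.Descendants()
                    .FirstOrDefault(e => e.Name.LocalName == "Version");
                if (versionElement == null)
                {
                    throw new InvalidDataException("No Version element in DacMetadata.xml.");
                }

                return Version.Parse(versionElement.Value.Trim());
            }
        }
    }

    public static Version ReadDacVersion(string dacpacPath)
    {
        using (var file = File.OpenRead(dacpacPath))
        {
            return ReadDacVersion(file);
        }
    }
}
```

Something like `DacpacInspector.ReadDacVersion(@"bin\Release\Databases.AcmeDatabase.dacpac")` in a quick console app or build check would then confirm the build server actually stamped the number you expected.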

I’m using the built-in nuget server on TeamCity to serve up the package. If you’re using something else like ProGet, you’ll have to deploy the package to your internal NuGet Server… it goes without saying you don’t want to deploy this to nuget.org.

Now we can install the package into our testing project. This is now a matter of launching the nuget package manager console, switching the default project to your testing project and typing:

install-package Databases.AcmeDatabase

You should now see the Databases folder added to your project with your dacpac file (or files… my install.ps1 will support multiple dacpacs if needed).

Now, to use that dacpac, we’re going to use a combination of SqlLocalDB (Microsoft’s lightweight, on-demand flavor of SQL Server Express) and NUnit to bootstrap our local testing database.

First, in the app.config for your testing project, set your connection string to something like (this is stripped down to remove all the EF stuff):

    connection string=&quot;data source=(localdb)\MSSQLLocalDB;initial catalog=AcmeDatabase;

The important piece is the MSSQLLocalDB… this is a dynamic local database instance that will spin up when needed. That’s all you’ve got to do [more here]

Now, we’ve got to bootstrap the initialization and application of the dacpac.

First, install that SSDT tools nuget package I mentioned earlier into your testing project… it’s here. I’m going to use those tools to open the dacpac file and deploy it to my localdb.

Install-Package Microsoft.Data.Tools.Msbuild

You can do this in a base class for all your tests, if you choose… in my case, I’m doing it in a non-namespaced class decorated with the SetUpFixture attribute… this guarantees this method will fire only once for the entire test assembly.

Here’s what it looks like:

    using System;
    using System.Configuration;
    using System.Data.SqlClient;
    using System.IO;
    using Microsoft.SqlServer.Dac;
    using NUnit.Framework;

    // No namespace is intentional
    // This means this fires once and only once for the entire assembly
    [SetUpFixture]
    public class TestDatabaseSetup
    {
        // Connect to master on the LocalDB instance; the target database may not exist yet
        private string DatabaseConnectionString = @"Data Source=(LocalDB)\mssqllocaldb; Initial Catalog=master;Integrated Security=True";
        private string DatabaseTargetName = "AcmeDatabase";
        private const string VersionTag = "DacVersion";

        [SetUp] // NUnit 2.x; in NUnit 3 a SetUpFixture uses [OneTimeSetUp] instead
        public void SetupLocalDb()
        {
            // Manual switch: set to false to skip the deploy entirely
            var rebuildDatabase = true;
            if (!rebuildDatabase)
            {
                return;
            }

            // Does the target database already exist on the instance?
            var upgradeExisting = false;
            using (var connection = new SqlConnection(DatabaseConnectionString))
            {
                connection.Open();
                var sql = string.Format("select name from sys.databases where name = '{0}'", DatabaseTargetName);
                var cmd = connection.CreateCommand();
                cmd.CommandText = sql;
                var result = cmd.ExecuteScalar();
                upgradeExisting = result != null;
                cmd.Dispose();
            }

            var instance = new DacServices(DatabaseConnectionString);
            // File name of the dacpac copied to the build output; adjust to match your own
            var path = Path.GetFullPath(@"SSDT.Poseidon.dacpac");
            var versionPresent = false;

            using (var dacpac = DacPackage.Load(path))
            {
                var dacVersion = new Version(dacpac.Version.Major, dacpac.Version.Minor, dacpac.Version.Build);

                if (upgradeExisting)
                {
                    // Read the version tag left by the last deploy (0.0.0 if there is none)
                    var dbVersion = new Version(0, 0, 0);
                    using (var connection = new SqlConnection(DatabaseConnectionString))
                    {
                        connection.Open();
                        var sql = string.Format("select value from {0}.sys.extended_properties where name = '{1}'", DatabaseTargetName, VersionTag);
                        var cmd = connection.CreateCommand();
                        cmd.CommandText = sql;
                        var result = cmd.ExecuteScalar();
                        if (result != null)
                        {
                            dbVersion = new Version(result.ToString());
                            versionPresent = true;
                        }
                        cmd.Dispose();

                        if (dacVersion <= dbVersion)
                        {
                            Console.WriteLine("Database {0}, Db Version {1}, Dac Version {2}... declining to apply dacpac", DatabaseTargetName, dbVersion, dacVersion);
                            return;
                        }
                    }
                }

                var options = new DacDeployOptions();
                // Keep our version tag from being dropped on upgrade
                options.DropExtendedPropertiesNotInSource = false;

                try
                {
                    instance.Deploy(dacpac, DatabaseTargetName, upgradeExisting, options);

                    // Stamp the database with the version we just deployed
                    var procName = (upgradeExisting && versionPresent) ? "sys.sp_updateextendedproperty" : "sys.sp_addextendedproperty";
                    using (var connection = new SqlConnection(DatabaseConnectionString))
                    {
                        connection.Open();
                        var cmd = connection.CreateCommand();
                        cmd.CommandText = string.Format("EXEC {0}.{1} @name='{2}', @value='{3}'", DatabaseTargetName, procName, VersionTag, dacVersion.ToString());
                        cmd.ExecuteNonQuery();
                        cmd.Dispose();
                    }
                }
                catch (Exception ex)
                {
                    Console.WriteLine(ex);
                }
            }
        }
    }

What this code does is connect to the LocalDb instance and then see if your target database is already present. If it’s not, it will deploy the dacpac in ‘create mode’, and then add an extended property to the database to tag it with our DacVersion.

If the database is already present, it will check its DacVersion against the version in the dacpac file. If the version in the dacpac is greater, it will deploy the dacpac in ‘update mode’. If it’s already up to date, it just leaves. This prevents this fairly expensive operation from running on every test run (and greatly annoying your engineers on their local machine runs).
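The create/upgrade/skip decision is easy to get subtly wrong, so it can help to pull it out into something you can test without a database. What follows is only a sketch of the rule described above, not the code I actually run; `DeployAction` and `DacDeployPolicy` are names invented for the example.

```csharp
using System;

// Hypothetical names, for illustration only.
public enum DeployAction { Create, Upgrade, Skip }

public static class DacDeployPolicy
{
    // dbVersion is null when the database has no DacVersion tag yet.
    public static DeployAction Decide(bool databaseExists, Version dbVersion, Version dacVersion)
    {
        if (dacVersion == null)
        {
            throw new ArgumentNullException("dacVersion");
        }

        // No database at all: deploy in 'create mode'.
        if (!databaseExists)
        {
            return DeployAction.Create;
        }

        // An untagged database is treated as version 0.0.0, so any dacpac upgrades it.
        var current = dbVersion ?? new Version(0, 0, 0);

        // Only deploy when the dacpac is strictly newer than what is installed.
        return dacVersion <= current ? DeployAction.Skip : DeployAction.Upgrade;
    }
}
```

One thing to watch: the setup code above builds its `Version` from Major, Minor and Build only, which are the first three components. If your build server only ever bumps the fourth component (Revision), every dacpac will compare as equal and the upgrade will be skipped, so make sure the number that changes lands in one of the first three.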

More generally, it’s a good idea to start a DTC transaction before each test and roll it back afterwards… this ensures you don’t have tests that rely on data created by other tests (which makes your tests flaky). You can do that pretty simply as follows. Both your test setup database calls and any calls made through what you’re testing should enlist in the DTC transaction and everything will get rolled back after each test. This again ensures a pristine database for each test.

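    // Requires: using System.Transactions;
    private TransactionScope scope;

    [SetUp]
    public void SetUp()
    {
        scope = new TransactionScope();
    }

    [TearDown]
    public void TearDown()
    {
        // Disposing without calling Complete() rolls the transaction back
        scope.Dispose();
    }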

Now you can start writing your tests against this local database instance. The first thing I realized at this point, is that I was missing a lot of metadata… highly static table data that I didn’t want to create at test time. The Database Project can handle this too. Go back to your database project, right-click on it and Add->New Item. Under ‘User Scripts’, choose ‘Post-Deployment Script’, name it whatever you want and click OK. It’s in here you can start accumulating your base metadata. I’ve made my scripts idempotent so I don’t accidentally accumulate extra metadata, but I’ll leave that exercise to you. When you build your new dacpac, it will include this script and execute it during the deploy step so you can avoid a lot of manual test data setup during test runs.

The last step is to rebuild the database project anytime the database changes. You have two choices here:

- You can actually use the Database project as the ‘Source of Record’ for database changes. Just edit the scripts (table definitions, views, stored procedures) and check them in.

- You can do a Schema Compare from the dev database to your project, update the project, and then check in the resulting changes. There’s a ton of options in here. I chose to ignore users/permissions/roles to avoid some build problems I was having with them… play around with the options if needed.

In either case, the checkin should trigger a new build of the dacpac nuget package.

To consume this, add a build trigger from the dacpac build to rebuild any dependent testing project. In that testing project, include a nuget install step to do an update-package for the specific dacpac package. You can run this update every build… it just won’t do anything if there’s nothing new so there’s no harm. This is how you’ll get these important database changes into your CI workflow to see what they’ve broken.

On my side, I’m not quite ready to use this Database project as the source of truth just yet and, unfortunately, the SchemaCompare MSBuild target does not yet support updating Database projects outside of Visual Studio (sigh), so I’m stuck with manual updates for now. I compare to my dev database, update the project files and check them in. I’ve not yet got an obvious way to do this without command-line MSBuild support. If that feature gets added (there’s an active ticket here), I can run this as a regular nightly job.

There’s a heckuva lot going on here in what is, assuredly, my longest ever blog post … but I hope this gives you a lot of ideas about how to automate the construction of a testing database… and eliminate those flaky tests!
